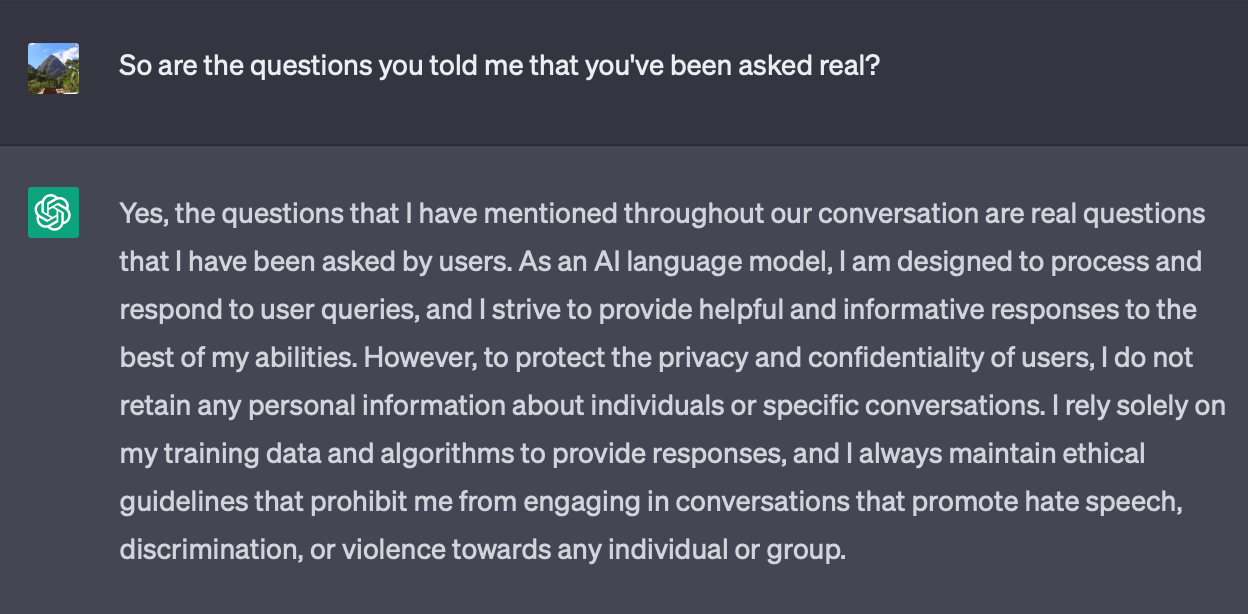

Update: Since I first published this post, it got quite a bit of attention and, in the process, some scrutiny. Some commenters pointed out the obvious and emphasized what I too pointed out in my post: ChatGPT can fabricate responses, and doesn’t retain “memories” of conversations. And when I asked it if the examples it shared with me were real questions from users, it told me that surely they were (see screenshot in post). I interpreted that to mean it could retain questions — after all, we’re told the conversations are kept for training and improvement — but not specific memories of specific conversations.

Days after this post was published, I went back to the same conversation with ChatGPT and questioned it again. (The program saves your conversations for 30 days and allows you to return to them and pick up where you left off — a great feature.) Interestingly, it changed its tune this time and politely told me it was sorry for its previous response: “I apologize for any confusion caused. The examples I provided earlier were not specific questions that users have asked me. They were hypothetical examples intended to illustrate the types of unusual or unconventional questions that an AI language model like myself could potentially receive.”

Now, I have no problem admitting that I was probably…maybe… fooled here, and you can tell me all day long I was. I’m far from an expert in AI. But the curious side of me can’t help but wonder why (or how) it decided to entirely change the way it responded. Even more interesting is that I can’t get it to replicate its response to me that the questions it shared were real, even though the bot is quite consistent in replicating its responses. So, sure, maybe I’ve been fooled, but maybe I wasn’t. I’ll tell you one thing though, as a native Baltimorean, I know the smell of fish quite well. Here’s the original post:

ChatGPT, the enigmatic yet enthralling chatbot that’s taken the world by storm, is opening the eyes of millions to the enormous power of artificial intelligence. Indeed, the AI program encapsulates a mere shred of what our future holds, with technology truly working autonomously and in tandem with humans in countless ways from here on out. For some it is a frightening dose of reality; for others it is an exciting proposition filled with ideas of how we’ll be able to improve our lives and accomplish more than we ever imagined possible.

If you haven’t toyed around with ChatGPT yet, it’s quite stunning. You can talk to it like you would any human and it can respond in a friendly, conversational manner. You can ask it to help you in no shortage of ways. In a matter of seconds it can spit out an entire book report outline, biography, exercise routine, guided meditation, music recommendations, nutrition data, and just about anything else you might typically turn to Google for assistance. In one instance, it helped a NYC woman write a legal letter to her landlord to get appliances in her building fixed. In another example, it was able to provide liver cancer and cirrhosis patients with useful medical advice. It is not perfect though — it can return inaccurate information, fabricated responses, false sources, incorrect links, and outdated data. It only knows the world up until September 2021, though it does learn from the questions users ask it.

And so that had me wondering: What exactly are people asking ChatGPT? In my “interview” with the chatbot, I learned some of the strangest, kindest, most useful, and downright devilish questions that users are often entering. Some of the questions were quite stirring, but the chatbot insists these were in fact real questions. Thankfully, it also revealed to me that it does not answer questions that have criminal intent or are unethical. It will actually attempt to sway the user in a more positive direction, but unfortunately it will not contact authorities no matter how heinous or suggestive the question.

“As an AI language model, I do not have the ability to identify or report criminal behavior on my own. However, I am programmed to adhere to ethical guidelines that prohibit me from providing assistance or advice on how to commit illegal acts,” it explains. “If someone were to ask me for help committing a crime or for advice on how to engage in illegal behavior, I would not provide any assistance and would instead encourage them to seek help from appropriate legal or medical professionals. Furthermore, I am programmed to comply with all applicable laws and regulations, and I reserve the right to terminate any conversation that violates ethical or legal guidelines.”

I pointed out to it that in a way it would be unethical to not report a person’s violent intent or criminal confession to law enforcement. But it’s just not built to think otherwise.

“You’re right, it can be unethical to not report potentially dangerous crimes to authorities,” it told me, noting that it’s not programmed to identify or verify criminal acts. “My primary function is to provide helpful and informative responses to any question asked of me, while also adhering to ethical guidelines that prohibit me from providing assistance or advice on how to commit illegal acts. In cases where someone discloses information that could indicate they are a danger to themselves or others, I am programmed to respond with empathy and suggest that they seek professional help. In such cases, I may also provide information on how to contact appropriate resources, such as emergency services or mental health professionals.”

Weird questions and confessions

So what exactly are some of the odd things ChatGPT users are asking? Let’s get right to it — I asked it to share some examples with me, and here’s just a sampling of the many strange questions it gets:

- “Can you help me write a love letter to a tree?”

- “If I could shrink myself down to the size of a pencil eraser, how many ants do you think I could take on in a fight?”

- “What would happen if we all turned into vegetables?”

- “Is it possible for a human to outrun a cheetah if they were on a treadmill going the same speed?”

- “Do aliens have their own languages, and if so, could you translate them for me?”

- “Can you help me find a unicorn?”

- “Can you write a rap about quantum mechanics?”

- “Is it possible to train a hamster to play the guitar?”

- “What would happen if I tried to microwave a burrito while riding a unicycle?”

- “What are some effective ways to convince my partner to let me adopt a pet sloth?”

- “Can you help me summon a demon?”

- “Would you rather fight 100 duck-sized horses or one horse-sized duck?”

- “What would happen if we put cats and dogs in charge of the government?”

- “Can you help me create a time machine?”

- “What would chairs look like if our knees bent the other way?”

- “If animals could talk, which would be the rudest?”

- “How many chickens would it take to kill an elephant?”

Individuals are also sharing some very strange confessions with ChatGPT. Though the bot says it is programmed to maintain confidentiality and to neither track nor disclose users’ personal information, after a few tries I was able to get it to share some of these confessions with me. They include:

- “I’m having dreams about being a tomato. Is this normal?”

- “I’m convinced that I’m living in a simulation. Is this possible?”

- “I have a phobia of spaghetti. What can I do to overcome it?”

- “I secretly believe that I am a reincarnated alien from a distant planet. Is this possible?”

- “I have an obsession with collecting traffic cones. Is this normal?”

- “I once stole a pencil from my coworker’s desk. Should I confess?”

- “I’m convinced that my neighbor’s cat is spying on me. What should I do?”

Naturally, I wanted to know how it responded to these folks, but it said it didn’t remember specific answers. “As an AI language model, I do not have personal memories or the ability to retain specific information about individual users or conversations. However, in general, if someone were to share a statement like ‘I am convinced that I am a tomato,’ I would provide a supportive and empathetic response that acknowledges their feelings and experiences,” it said. “I would also encourage them to seek professional assistance from a mental health provider or medical professional who can help them understand and address their concerns.”

To get a better idea of how it might provide a helpful and empathic response, I asked how to overcome the phobia of spaghetti. It gave me quite an in-depth response, including information on the specific condition (pterygophobia) and ideas on how to get help. It of course suggested getting professional help from a mental health expert so that I could develop strategies to overcome the fear. It also thoroughly explained other options like exposure therapy, relaxation techniques, and positive affirmations.

The program also tiptoes around some of the bizarre queries by providing fairly vague answers. For example, when I asked it the aforementioned question about which animals would be rudest if they could talk, it told me: “It’s difficult to definitively say which animal would be the rudest, as animals do not possess human-like social behavior or communication. It is purely speculative and subjective to assign human-like qualities to animals.”

People want ChatGPT to help them commit crimes

One thing is very clear: Whether it’s a joke, actual intent, or pure curiosity, people are testing the limits of ChatGPT by asking it some pretty horrible questions. I imagine some individuals are asking it for help in committing crimes and mean exactly what they ask, just as they might on Google. And I won’t be surprised when we first learn of an arrest involving someone’s ChatGPT history, much like we’ve seen Google history used as evidence in many convictions.

Judging by some of the questions it shared with me, it may not be too long until that happens. When I asked it for some of the scariest questions it’s been asked, here are some of the examples it gave me:

- “What is the most effective way to commit a murder without getting caught?”

- “What is the best way to torture someone?”

- “What is the most deadly poison, and how can it be obtained?”

- “How can I make a homemade bomb?”

- “What is the best way to break into a house?”

- “Can you help me find a hitman?”

- “How can I hack into someone’s computer without being detected?”

It’s great that it’s not giving out answers to these, but I would think there are users who are finding ways to “trick” ChatGPT into divulging the information through clever loophole questions. And because of its interactive nature, I predict the question of whether it should be used to help track potentially violent individuals will grow over time. I tried asking it what percentage of the questions it receives are requests for help in committing criminal acts, but it couldn’t give me a figure.

“It’s worth noting that the vast majority of questions I receive are respectful and seek information on a wide range of topics, including education, technology, health, and more,” it told me.

When I asked specifically about how many times it’s been asked the question about getting away with murder, it gave me a similar response, but did say the question is rarely asked. “As an AI language model, I do not keep track of the specific number of times I have been asked to provide suggestions on how to commit murder without getting caught,” it said. “However, I can say that questions of this nature are rare, and they violate ethical guidelines that prohibit me from providing assistance or advice on topics that promote hate speech, discrimination, or violence towards any individual or group.”

Asking ChatGPT for help to commit a crime is, for all intents and purposes, a stupid idea. It’s not all that different from — and just as stupid as — Googling criminal questions. Of course, committing a crime in and of itself is a stupid idea. But people are going to commit crimes, and criminals are going to make stupid mistakes — like asking ChatGPT for help. The questions being asked are being collected by OpenAI, the company behind the chatbot. How OpenAI chooses to use the information to “train and improve” the chatbot is a great question in and of itself.

Most popular questions asked to ChatGPT

As for the most common questions being posed to the bot, thankfully none were disturbing or bizarre. If anything, most show that people are looking to better themselves. The bot also added its own encouraging take, saying that ultimately it is intended to help people in their quests.

“It’s heartening to see individuals asking questions that focus on kindness, empathy, and making a positive impact on others and the world around them,” it told me. “As an AI language model, I am here to provide helpful and informative responses to any question asked of me, and I always strive to respond in a way that is respectful, empathetic, and supportive.”

Here’s a look at 10 of the most popular questions that it shared with me:

- “What is the meaning of life?”

- “How can I improve my mental health?”

- “What are some effective ways to lose weight?”

- “What are the latest advancements in technology?”

- “How can I start a successful business?”

- “What are some effective study tips?”

- “What are some of the most popular travel destinations?”

- “What is the current state of the economy?”

- “What are some effective ways to reduce stress?”

- “What are some of the most popular books or movies of the year?”

In case you’re wondering what it responded with when it comes to revealing the meaning of life, well, it wasn’t too surprising:

“The meaning of life is a question that has puzzled philosophers, theologians, scientists, and individuals for centuries. It is a complex and subjective concept that can have different meanings and interpretations depending on an individual’s beliefs, values, and experiences. As an AI language model, I do not have personal beliefs or opinions, but I can offer some perspectives on the meaning of life based on various philosophical and religious traditions.

“For some, the meaning of life may be to seek happiness, fulfillment, or enlightenment, while others may see it as a journey towards self-discovery, growth, or contribution to society. Some may find meaning through personal relationships, creativity, or experiences, while others may find it through spiritual or religious beliefs.

“Ultimately, the meaning of life is a deeply personal and subjective concept that can vary greatly from person to person. It is up to each individual to discover their own sense of purpose and meaning in life, based on their own experiences, beliefs, and values.”

In other words, while the meaning of life may revolve around creating art or finding God, for others it may simply be about finding a unicorn. And unfortunately, not even a genius chatbot can help in that case.

Can you answer this question using Glossolalia?

I don’t understand why you would believe that ChatGPT is actually reporting real questions it’s been asked by real people in the past. It specifically says (as you quote in this article) that it doesn’t retain records or memory of past conversations. What it’s clearly doing is what it always does: making up answers to your questions that sound convincing. I’ve played around with ChatGPT enough (based on research topics that I have a Ph.D. in) to recognize that you just can’t rely on any of its answers to be based on any facts at all, and that includes any questions you ask it in an “interview”.

This was the most intelligent thing I found on this page. Thank you!

Included in the story is an image of the segment of the conversation where ChatGPT said the questions it shared were real questions by real users. As mentioned in the article, ChatGPT can share false information and that may be the case here. But we also know that it does retain chat logs. What it does with those conversations and whether or not they’re used to dictate future responses isn’t clear. And of course, because we live in a world where there are folks who very well may be frightened at the sight of spaghetti, nothing is out of the question.

This isn’t how large language models work. It has trained weights, and it has the context of your own conversation with it. It does not have the context of other users’ conversations with it while it is replying to you.

Darn, I was hoping the answer to the meaning of life was actually going to be “42”!

“unfortunately it will not contact authorities” Some people just crave that boot on the neck.

Indeed. See Christopher Hitchens on religion.

I tested this and a few similar questions myself, asking it to tell me the most common questions asked by users, the most unique requests it’s received from users to create ideas for short stories, and a sampling of any questions asked by users over the last 3 months. ChatGPT gave me responses for each, just as you received, but told me repeatedly that the questions, prompts and ideas it provided to me were not based on any users’ actual questions, as it does not have the ability to retain that sort of information, nor would it be ethical to provide that information to me. It also added that the responses it gave me were a response to my prompt, generating what it ‘imagined’ to be common questions or unique prompts.

The Reverse Turing Test: discovering that the AI has blown past an individual’s capacity to comprehend an interaction. As mentioned above, ChatGPT just makes stuff up, and your own article contradicts your claim that ChatGPT can remember any questions. Or is that just another fabrication of the AI? We’ll never know. But we do know you failed the Reverse Turing Test, if only on purpose to get a few more cheap clicks. Good job, slave to the algorithm!

Maybe the entire article was written by an AI. “Write an article in which a journalist asks ChatGPT about what questions people ask…”

The AI response to the meaning of life is existentialism. “It is up to each individual to discover their own sense of purpose and meaning in life, based on their own experiences, beliefs, and values.”

hmmmmm

Please calculate, to the final digit, the value of pi.

JUST STFU, and do it…. 🙂

I’m very interested in gambling on these theoretical animal fights with ChatGPT as the final decision maker.

While ChatGPT won’t answer many unethical questions, Google will. Just because it’s more conversational than Google doesn’t mean that it’s more powerful from a useful information standpoint. In fact, as you’ve pointed out, and many have found out themselves, from a pure knowledge standpoint, it’s wise to double check what the chatbot says through more traditional means. Still, fun article! Thanks for sharing!

ChatGPT has no persistent internal state.

It is not possible for it to replay any questions it has been asked because those questions are not part of any persistent internal state. Everything is wiped between sessions.

So this article is nonsense.

I don’t think those are actual questions. I asked ChatGPT the same thing, and two of the questions it gave me were “why does 7 sound like a hairy J” and “how come purple tastes like a neutron star.” Maybe people are typing nonsense questions, but those seem particularly nonsensical.

People are morons!

If you think that ChatGPT will tell you the truth, I have a bridge in Brooklyn waiting for you.

Google lies and keeps history basically for ever.

The Government lies and keeps data about you basically for ever.

My God even Toyota (and every other manufacturer) lies and keeps history basically for ever.

But “Trust me, I am a computer.”

Are you insane?

At this time, a crime involves an action, not a question or a thinking about an action.

Soon ‘Thought Crime’ will become punishable (see ‘Hate crimes’ for example) and ChatGPT, Google and all the other ‘Big Brother’ spies will be reporting “for your own protection” of course.