PITTSBURGH — Robots may soon be able to help people to do the laundry and other household tasks as a new technique has given them the sense of touch.

The system, called ReSkin, enables robots to differentiate between objects, such as thin layers of cloth, using only their sense of touch, something that is second nature for humans. For robots, however, tasks such as grabbing a glass or folding towels have been “extremely challenging” until now, according to a team at Carnegie Mellon University.

The amount of data gathered through touch is difficult to quantify and the sense has been hard to simulate in robotics until recently.

“Humans look at something, we reach for it, then we use touch to make sure that we’re in the right position to grab it,” says David Held, an assistant professor in the School of Computer Science and head of the Robots Perceiving and Doing (R-Pad) Lab, in a university release. “A lot of the tactile sensing humans do is natural to us. We don’t think that much about it, so we don’t realize how valuable it is.”

To fold laundry, robots need a sensor to mimic the way human fingers can feel the top layer of a towel or shirt and grasp the layers beneath it. Researchers can teach a robot to feel the top layer of cloth and grasp it, but without the robot sensing the other layers it wouldn’t be able to grasp them or fold the cloth.

“How do we fix this?” Held asks. “Well, maybe what we need is tactile sensing.”

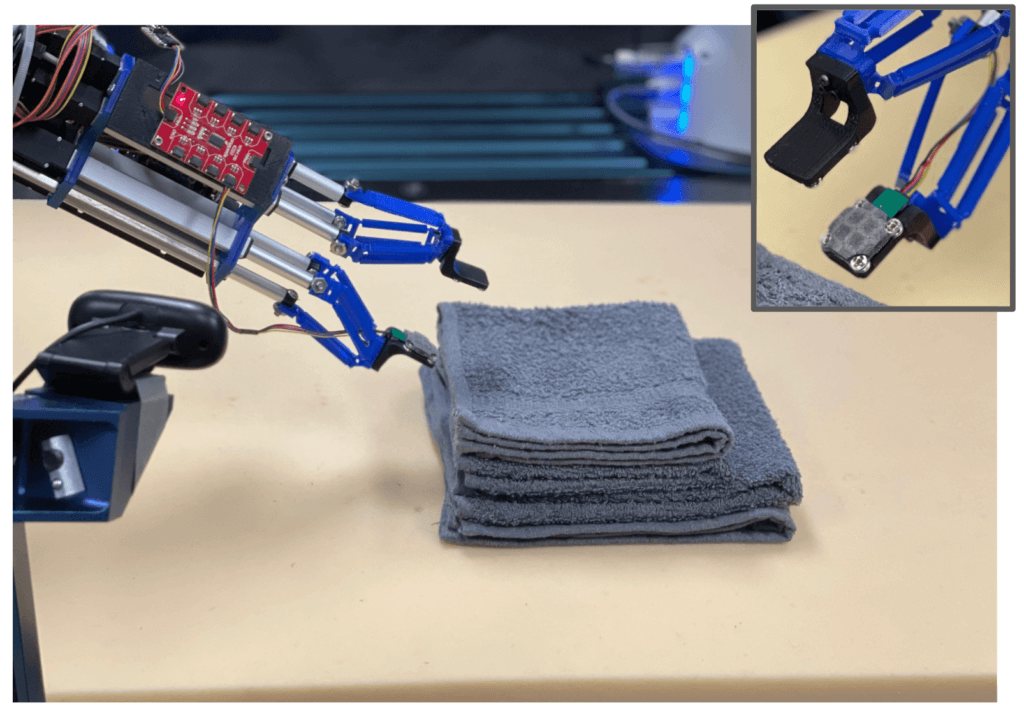

The team developed an open-source, touch-sensing skin made of a thin, elastic polymer embedded with magnetic particles that give a three-axis sense of touch. ReSkin helps the robot feel layers of cloth rather than perceiving them solely through vision.

“By reading the changes in the magnetic fields from depressions or movement of the skin, we can achieve tactile sensing,” explains Thomas Weng, a Ph.D. student in the R-Pad Lab, who worked on the project with Robotics Institute postdoc Daniel Seita and grad student Sashank Tirumala. “We can use this tactile sensing to determine how many layers of cloth we’ve picked up by pinching with the sensor.”
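
As a rough illustration of the idea Weng describes, the Python sketch below turns a change in a three-axis magnetic reading into a layer count. It is not the team's code: the calibration values, the function names and the nearest-centroid rule are all assumptions made for this example, standing in for whatever model the researchers actually trained.

```python
import math

# Hypothetical calibration table: the average change in the three-axis
# magnetic reading (arbitrary units) when pinching 0, 1 or 2 layers.
# These numbers are invented for illustration, not measured ReSkin data.
CENTROIDS = {
    0: (0.0, 0.0, 5.0),
    1: (0.5, 0.2, 12.0),
    2: (1.0, 0.4, 20.0),
}


def field_change(baseline, reading):
    """Per-axis difference between a pinched reading and the resting baseline."""
    return tuple(r - b for r, b in zip(reading, baseline))


def estimate_layers(baseline, reading, centroids=CENTROIDS):
    """Return the layer count whose calibration point is closest to the change."""
    delta = field_change(baseline, reading)
    return min(centroids, key=lambda n: math.dist(delta, centroids[n]))
```

With a resting baseline of `(10.0, 10.0, 10.0)`, a pinched reading of `(10.4, 10.3, 21.5)` lies closest to the one-layer calibration point, so `estimate_layers` would report one layer.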

Other research has used tactile sensing to grab rigid objects, but cloth is deformable — meaning it changes when you touch it and is therefore more difficult to maneuver. Adjusting the robot’s grasp on the cloth changes both its pose and the sensor readings.

The researchers say they didn’t teach the robot how or where to grasp the fabric. Instead, they taught it to estimate how many layers of fabric it was holding using the ReSkin sensors and, if the count was wrong, to adjust its grip and try again. They then evaluated the robot picking up one and two layers of cloth of different textures and colors.
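
The estimate-and-retry approach can be sketched as a short loop. The Python below is a minimal sketch under stated assumptions: `grasp_target_layers`, `SimulatedGripper` and the one-layer-per-adjustment behavior are hypothetical stand-ins invented for this example, not the researchers' controller or a real robot interface.

```python
def grasp_target_layers(target, sense_layers, adjust_grip, max_attempts=10):
    """Pinch, estimate the layer count by touch, and retry until it matches.

    Returns the number of attempts used, or None if the target was not reached.
    """
    for attempt in range(1, max_attempts + 1):
        held = sense_layers()
        if held == target:
            return attempt
        # A positive error means too many layers are held, negative too few.
        adjust_grip(held - target)
    return None


class SimulatedGripper:
    """Toy stand-in for a robot: 'depth' is how many layers are pinched."""

    def __init__(self, depth=0):
        self.depth = depth

    def sense_layers(self):
        # A real system would estimate this from tactile readings.
        return self.depth

    def adjust_grip(self, error):
        # Assumption: each adjustment changes the pinch by exactly one layer.
        if error > 0:
            self.depth -= 1
        else:
            self.depth += 1
```

For example, a `SimulatedGripper(depth=4)` asked for two layers would release one layer per adjustment and succeed on its third attempt. A real gripper would be far less predictable, which is why the loop caps the number of attempts.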

“The profile of this sensor is so small, we were able to do this very fine task, inserting it between cloth layers, which we can’t do with other sensors, particularly optical-based sensors,” Weng says. “We were able to put it to use to do tasks that were not achievable before.”

The robots’ enhanced dexterity is down to ReSkin’s thinness and flexibility, explain the researchers, who stress that more research is needed before robots are ready to handle a real laundry basket.

“It really is an exploration of what we can do with this new sensor,” Weng concludes. “We’re exploring how to get robots to feel with this magnetic skin for things that are soft, and exploring simple strategies to manipulate cloth that we’ll need for robots to eventually be able to do our laundry.”

The team presented their findings at the 2022 International Conference on Intelligent Robots and Systems.

South West News Service writer Danny Halpin contributed to this report.