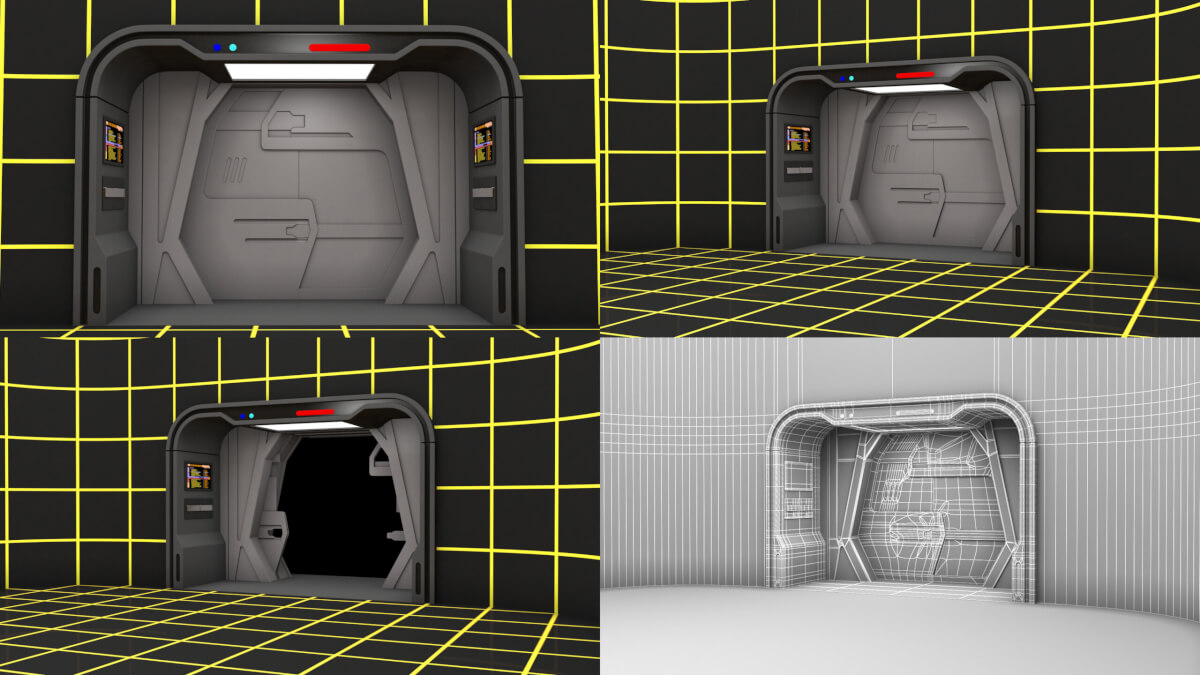

PHILADELPHIA — Remember the holodeck from “Star Trek: The Next Generation”? That virtual reality room aboard the Enterprise that could create any kind of environment you could dream up, from alien jungles to the residence of Sherlock Holmes — using nothing but voice commands? It might have been an invention of the 24th century on television, but researchers at the University of Pennsylvania have brought that sci-fi dream to life today!

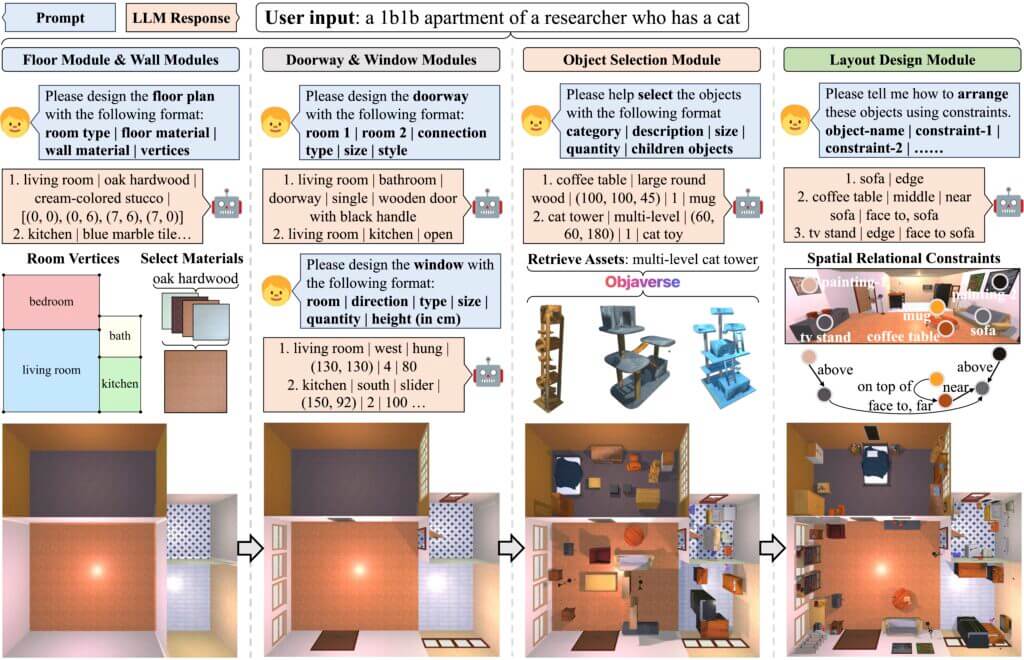

Calling their new system “Holodeck” in honor of its Star Trek origins, the Penn researchers are using artificial intelligence to interpret plain-language requests and then generate photorealistic 3D virtual environments to match. Just as Captain Picard could ask the holodeck for a detective’s office from the 1940s, the Penn team can simply ask for “a 1-bedroom apartment for a researcher who has a cat.” In seconds, Holodeck will generate the floors, walls, windows, and furnishings, and even add realistic clutter like a cat tower.

“We can use language to control it,” says Yue Yang, a doctoral student who co-created Holodeck, in a university release. “You can easily describe whatever environments you want and train the embodied AI agents.”

Training robots in virtual spaces before unleashing them in the real world is known as “Sim2Real.” Until now, however, generating those virtual training grounds has been a painfully slow process.

“Artists manually create these environments,” Yang explains. “Those artists could spend a week building a single environment.”

With Holodeck, researchers can rapidly create millions of unique virtual spaces to train robots for any scenario at a tiny fraction of the previous time and cost. This allows the robots’ AI brain – a neural network – to ingest massive datasets essential for developing true intelligence.

“Generative AI systems like ChatGPT are trained on trillions of words, and image generators like Midjourney and DALL-E are trained on billions of images,” says Chris Callison-Burch, an associate professor of computer science at Penn who co-led the project. “We only have a fraction of that amount of 3D environments for training so-called ‘embodied AI.’ If we want to use generative AI techniques to develop robots that can safely navigate in real-world environments, then we will need to create millions or billions of simulated environments.”

So, how does Holodeck conjure these virtual worlds from mere text descriptions? It harnesses the incredible knowledge contained in large language models (LLMs) – the same AI systems that power conversational assistants like ChatGPT.

“Language is a very concise representation of the entire world,” Yang says.

Language models turn out to have a surprisingly high degree of knowledge about the design of spaces. Holodeck essentially has a conversation with the LLM, carefully breaking down the user’s text into queries about objects, colors, layouts, and other parameters. It then searches a vast library of 3D objects and uses special algorithms to arrange everything just so – ensuring objects like toilets don’t float mid-air.
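To make the arranging step concrete, here is a minimal Python sketch of one way a layout routine could keep furniture on the floor, inside the room, and out of each other's way. It is an illustration only, not the Penn team's algorithm: the `Placement` class, the `arrange` function, the grid step, and the furniture sizes are all hypothetical, and a simple greedy search stands in for whatever "special algorithms" Holodeck actually uses.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Placement:
    """Hypothetical record: an object's footprint on the floor plan (meters)."""
    name: str
    x: float      # left edge of the footprint
    y: float      # near edge of the footprint
    width: float
    depth: float
    z: float = 0.0  # height of the base; 0.0 means it rests on the floor


def overlaps(a: Placement, b: Placement) -> bool:
    """True if two rectangular footprints intersect."""
    return (a.x < b.x + b.width and b.x < a.x + a.width
            and a.y < b.y + b.depth and b.y < a.y + a.depth)


def arrange(objects, room_w, room_d, step=0.5, seed=0):
    """Greedily place (name, width, depth) objects on a grid of floor positions.

    Each object takes the first shuffled grid position that keeps it fully
    inside the room and clear of everything already placed. Raises ValueError
    if an object cannot be placed (a greedy search does not backtrack).
    """
    rng = random.Random(seed)
    eps = 1e-9  # tolerance for floating-point comparisons
    placed = []
    for name, w, d in objects:
        candidates = [
            (i * step, j * step)
            for i in range(int(room_w / step) + 1)
            for j in range(int(room_d / step) + 1)
            if i * step + w <= room_w + eps and j * step + d <= room_d + eps
        ]
        rng.shuffle(candidates)
        for x, y in candidates:
            candidate = Placement(name, x, y, w, d)
            if not any(overlaps(candidate, other) for other in placed):
                placed.append(candidate)
                break
        else:
            raise ValueError(f"no free spot for {name!r}")
    return placed


if __name__ == "__main__":
    # Made-up furniture sizes for a 4 m x 3 m bedroom.
    furniture = [("bed", 2.0, 1.5), ("desk", 1.2, 0.6), ("cat tower", 0.6, 0.6)]
    for item in arrange(furniture, room_w=4.0, room_d=3.0):
        print(item)
```

In this sketch, "no floating toilets" is simply the fixed `z = 0.0`. A fuller system would also need rules the article only hints at, such as which objects belong against walls or next to one another.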

To evaluate Holodeck’s scene quality, the researchers had students compare environments created by their system to those from an earlier tool called ProcTHOR. The students overwhelmingly preferred Holodeck’s more realistic, coherent spaces across a wide range of settings, from labs to locker rooms to wine cellars.

However, the true test of Holodeck’s capabilities is whether it can actually help train smarter robots. The researchers put this to the test by generating unique virtual environments using Holodeck and then “fine-tuning” an AI agent’s object navigation skills in those spaces.

The results were promising. In one test, an agent trained in Holodeck’s virtual music rooms succeeded at finding a piano over 30 percent of the time. An agent trained on similar scenes from ProcTHOR found the piano only about six percent of the time, making the Holodeck-trained agent roughly five times as successful.

“This field has been stuck doing research in residential spaces for a long time,” says Yang. “But there are so many diverse environments out there — efficiently generating a lot of environments to train robots has always been a big challenge, but Holodeck provides this functionality.”

“The ultimate test of Holodeck is using it to help robots interact with their environment more safely by preparing them to inhabit places they’ve never been before,” adds Mark Yatskar, an assistant professor of computer science who co-led the work.

From homes to hospitals, offices to arcades, Holodeck allows AI researchers to create virtually unlimited training grounds for robots using just simple text commands. Much like the technology from Star Trek that could synthesize any object on demand or recreate any room from any era, Holodeck can synthesize entire worlds on demand.

So, thanks to AI and Star Trek, fantasy is now (virtual) reality.

In June, the research team will present Holodeck at the 2024 Institute of Electrical and Electronics Engineers (IEEE) and Computer Vision Foundation (CVF) Computer Vision and Pattern Recognition (CVPR) Conference in Seattle.