DURHAM, N.C. — An implant that converts brain signals into words may give people who cannot speak a way to communicate. The device decodes signals from the brain’s speech center to predict the sounds a person intends to make, which can then be turned into spoken words.

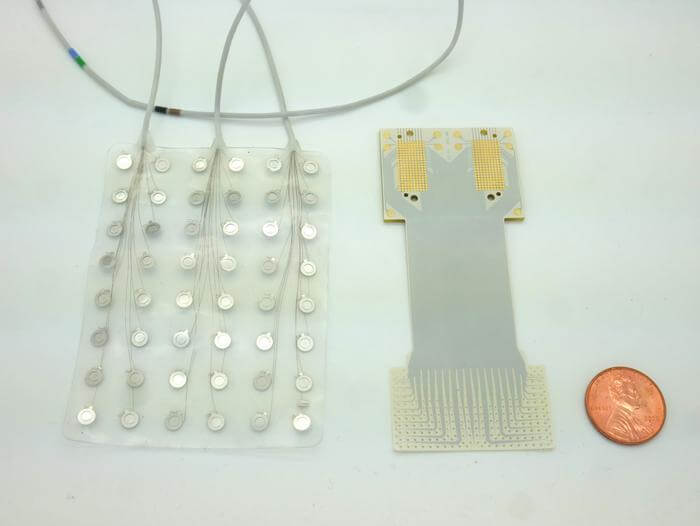

Sized similarly to a postage stamp, the implant houses 256 tiny sensors, enabling users to interface with a computer through brain activity.

Published in the journal Nature Communications, the study offers hope for individuals with “locked-in” syndrome and others for whom existing communication technologies are frustratingly slow. Currently, the best speech-decoding systems operate at about 78 words per minute, roughly half the rate of natural speech.

“There are many patients who suffer from debilitating motor disorders, like ALS (amyotrophic lateral sclerosis) or locked-in syndrome, that can impair their ability to speak,” says Gregory Cogan, Ph.D., a professor of neurology at Duke University’s School of Medicine and one of the lead researchers involved in the project, in a media release. “But the current tools available to allow them to communicate are generally very slow and cumbersome.”

To enhance performance, the team increased the number of sensors in the device that rests on the brain’s surface. The Duke Institute for Brain Sciences is renowned for developing high-density, ultra-thin, and flexible brain sensors on medical-grade plastic.

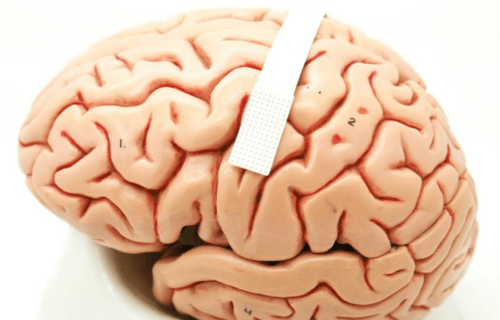

Because neurons just a grain of sand apart can show very different activity when coordinating speech, the device must distinguish signals from neighboring brain cells to predict intended speech accurately. The device was tested on four patients who were undergoing brain surgery for other reasons, such as treating Parkinson’s disease or removing a tumor.

“I like to compare it to a NASCAR pit crew,” Cogan explains. “We don’t want to add any extra time to the operating procedure, so we had to be in and out within 15 minutes. As soon as the surgeon and the medical team said ‘Go!’ we rushed into action and the patient performed the task.”

The participants were presented with a sequence of nonsense words, such as “ava,” “kug,” or “vip,” which they then spoke aloud. The implant recorded activity in the brain’s speech motor cortex, which coordinates the nearly 100 muscles that move the lips, tongue, jaw, and larynx.

Suseendrakumar Duraivel, the study’s first author and a graduate student, fed the neural and speech data into a machine learning algorithm to see how accurately it could predict the sounds being made based solely on brain activity recordings. For certain sounds and participants (for example, the “g” in “gak”), the decoder identified the sound correctly 84 percent of the time when it was the first of the three sounds that made up a nonsense word. However, accuracy dropped for sounds in the middle or at the end of a word, and the decoder struggled to tell apart similar sounds, like “p” and “b.”

The overall accuracy of the decoder was 40 percent. That is a significant achievement, considering that comparable brain-to-speech technologies often require hours or even days of recorded data to work from.

Remarkably, the speech decoding algorithm in this study operated with only 90 seconds of vocal data from the 15-minute test. With a recent $2.4 million grant from the National Institutes of Health, the team is now developing a wireless version of the device.

“We’re now developing the same kind of recording devices, but without any wires,” Cogan says. “You’d be able to move around, and you wouldn’t have to be tied to an electrical outlet, which is really exciting.”

“We’re at the point where it’s still much slower than natural speech,” notes co-author Dr. Jonathan Viventi in a recent Duke Magazine piece about the technology, “but you can see the trajectory where you might be able to get there.”

South West News Service writer Jim Leffman contributed to this report.