OAK BROOK, Ill. – When it comes to detecting various lung diseases from chest X-rays, human radiologists still have the edge over artificial intelligence, a new study reveals.

In the study, researchers pitted AI against human radiologists in diagnosing three specific lung diseases from more than 2,000 chest X-rays of elderly patients. The AI tools produced significantly more false positives (flagging a disease that wasn't actually present) than their human counterparts.

The findings reinforce the view that AI can play a supporting role in medical diagnosis but remains at an early stage of development.

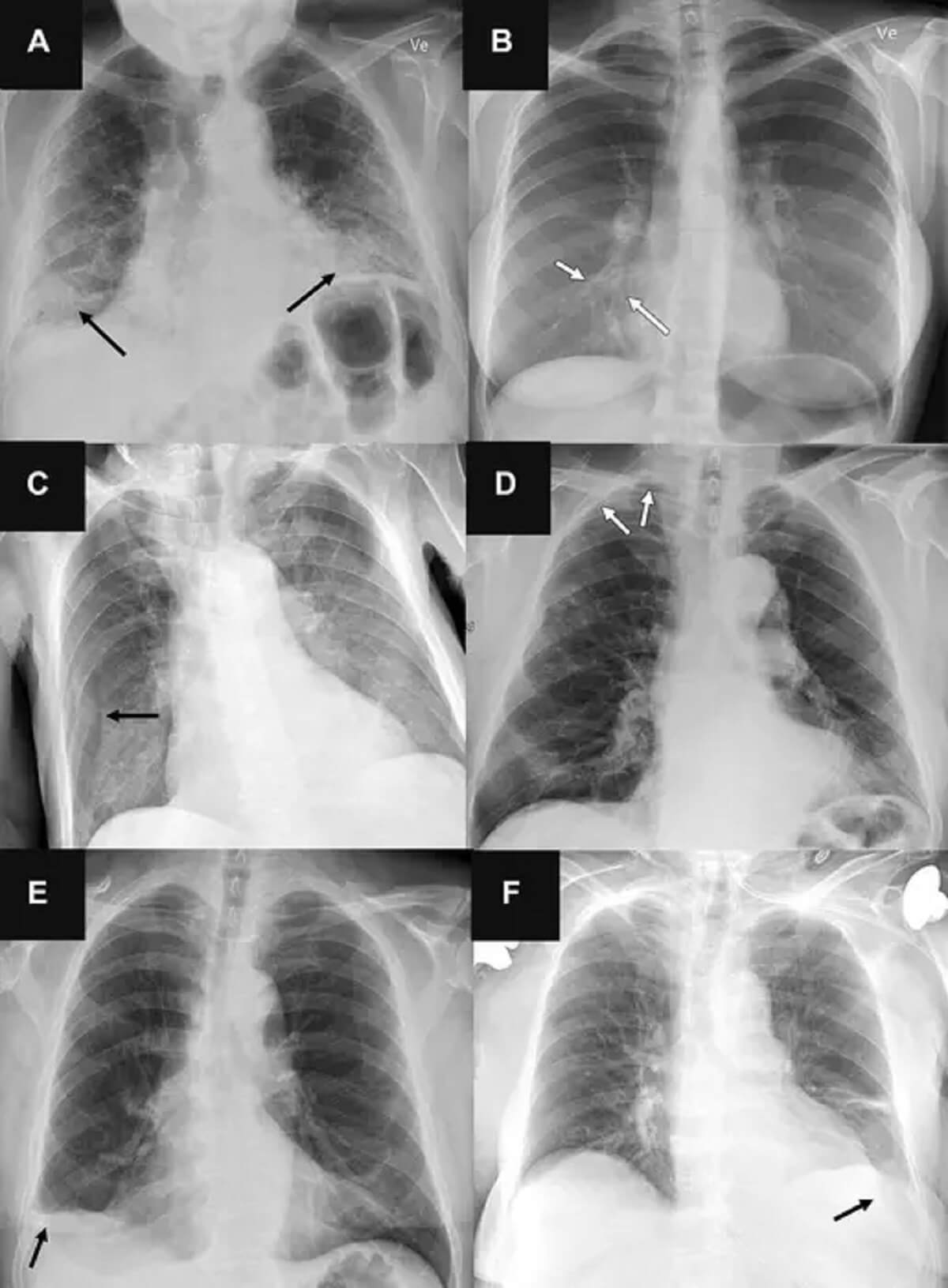

The Danish team from Herlev and Gentofte Hospital in Copenhagen compared the diagnostic performance of 72 radiologists with that of four AI tools, using 2,040 adult X-rays taken over two years at various Danish hospitals in 2020. Roughly a third of these scans (669, or 32.8%) showed at least one significant finding.

While approved AI tools are becoming increasingly popular due to a global shortage of radiologists, their real-world efficacy remains a topic of debate. The chest X-rays in the study were examined for three prevalent conditions: airspace diseases (like pneumonia or lung edema), pneumothorax (collapsed lung), and pleural effusion (fluid buildup around the lungs).

The AI tools posted sensitivity rates (the share of true cases each tool correctly flagged) of 72 to 91 percent for airspace diseases, 63 to 90 percent for pneumothorax, and 62 to 95 percent for pleural effusion. However, they generated more false positives than the human radiologists, especially for airspace diseases.

“Chest radiography is a common diagnostic tool, but significant training and experience is required to interpret exams correctly,” says Dr. Louis Plesner, the study’s lead researcher and a radiologist at Herlev and Gentofte Hospital, in a media release. “While AI tools are increasingly being approved for use in radiological departments, there is an unmet need to further test them in real-life clinical scenarios.”

The scientists stress that while AI can help identify normal chest X-rays, relying on it alone for diagnoses is premature. The team notes that radiologists generally outperform AI across the varied cases seen in everyday practice, suggesting that current AI tools are not yet ready to make diagnoses on their own. The researchers believe a next generation of AI that combines image review with patients' clinical histories and previous imaging could be far more effective, though such systems have yet to be developed.

“An AI system operating independently with the current rate of false positives is impractical. While AI can identify regular chest X-rays, it shouldn’t be solely trusted for making diagnoses,” concludes Dr. Plesner.

The study is published in the journal Radiology.

South West News Service writer James Gamble contributed to this report.