SEATTLE — A new smart speaker system that uses shape-changing technology lets users “mute” specific areas of a room. The system relies on self-deploying microphones to divide rooms into “speech zones” and track the location of individual speakers.

Locating and controlling sound, such as isolating one person's speech in a crowded room, has long stumped researchers, particularly without visual cues from cameras. Researchers from the University of Washington say their system, which uses deep-learning algorithms, lets users mute specific areas and separate simultaneous conversations, even when two people with similar voices are sitting next to each other.

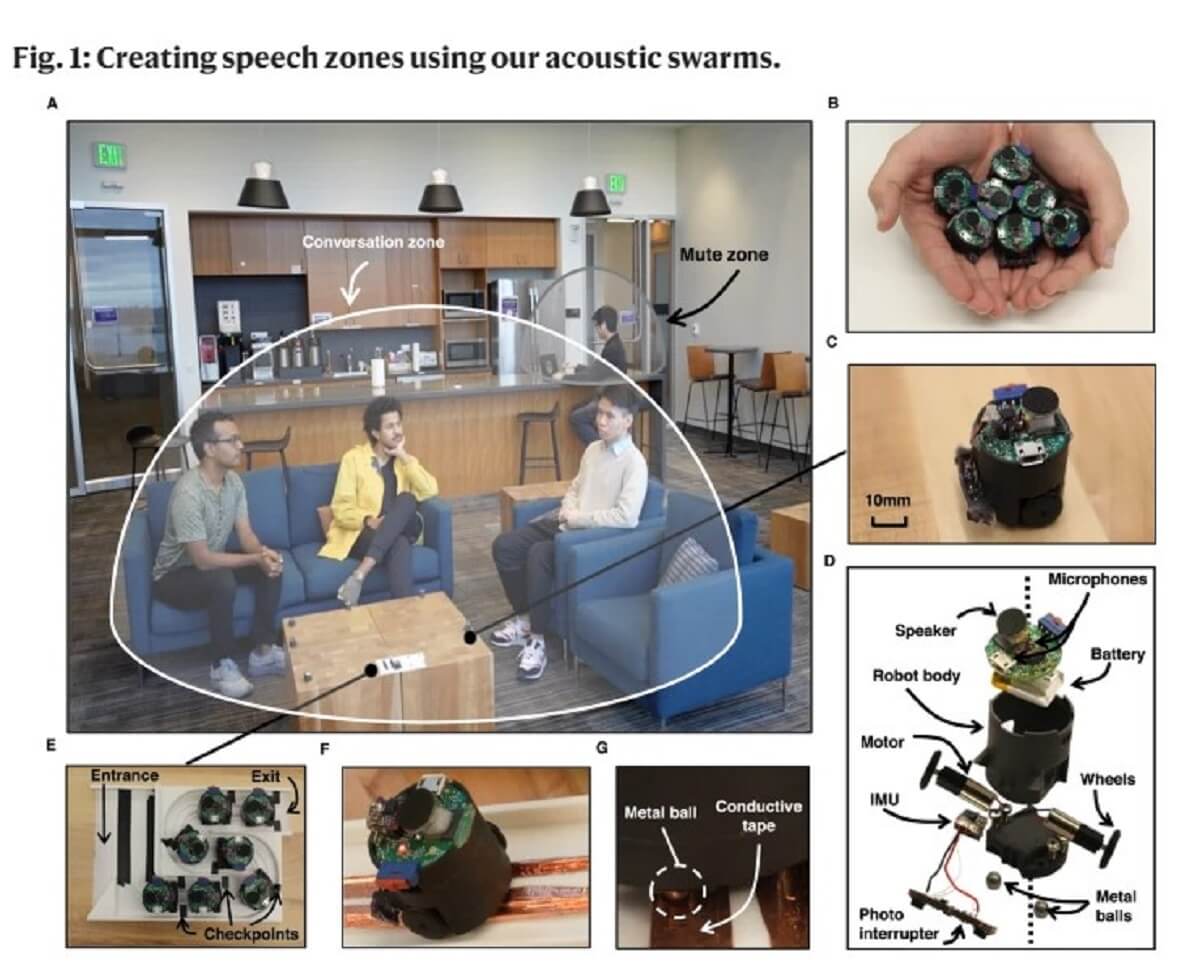

The researchers demonstrated how the microphones, which work like a fleet of tiny Roomba-style robots and are each roughly an inch in diameter, automatically deploy from and return to a charging station. This lets the system move between environments and set itself up automatically.

The scientists suggest that such a system could replace the central microphone in a conference room, giving users more control over in-room audio.

“If I close my eyes and there are 10 people talking in a room, I have no idea who’s saying what and where they are in the room exactly. That’s extremely hard for the human brain to process,” says study co-lead author Malek Itani, a doctoral student at UW, in a university release. “Until now, it’s also been difficult for technology. For the first time, using what we’re calling a robotic ‘acoustic swarm,’ we’re able to track the positions of multiple people talking in a room and separate their speech.”

Previous robotic swarm projects have mostly relied on overhead or on-device cameras, projectors, or specialized surfaces. The University of Washington team's system, by contrast, is the first to accurately deploy a robot swarm using sound alone.

The prototype consists of seven small robots that spread themselves across tables of various sizes. As they leave the charging station, each robot emits a high-frequency sound, much like a bat using echolocation, to navigate and avoid obstacles as it moves into position.

The robots spread out as far from one another as possible, because greater distances between the microphones make it easier to tell speakers apart and pinpoint where each one is.

“This system enables the isolation of any of the voices from four people engaged in two different conversations and the location of each voice in a room,” says co-lead author Tuochao Chen, also from the University of Washington.

In tests in offices, living rooms, and kitchens, the system distinguished voices within 1.6 feet of each other 90 percent of the time. However, it does not yet process audio fast enough for real-time communication such as video calls.

The researchers suggest that, as the technology matures, acoustic swarms could be built into smart homes, where voice commands could be limited to designated “active zones.”

The team acknowledges that the technology could be misused and has built in safeguards. The robots process all audio locally to protect privacy, and blinking lights make it easy to see when they are active.

“If two groups are conversing adjacent to each other and one is having a confidential discussion, our system can ensure that their conversation remains private,” concludes Itani.

The study is published in the journal Nature Communications.

South West News Service writer Stephen Beech contributed to this report.