LINKÖPING, Sweden — Have you heard the news? Research from Linköping University, Sweden, and the Oregon Health and Science University is calling into question old prevailing theories on how human hearing works. Scientists believe their study on how humans experience music and speech could even lead to better cochlear implant technology.

It’s well-documented that humans are social creatures by nature. Our sense of hearing helps us experience and distinguish voices and human speech. Sound arriving at the outer ear is carried via the eardrum to the spiral-shaped inner ear (cochlea). Sensory cells for hearing (outer and inner hair cells) are found within the cochlea. Upon contact, sound waves cause the “hairs” of the inner cells to bend, which sends out a signal via the nerves to the brain. From there our minds interpret the sounds we hear.

Key takeaways:

- Researchers are challenging traditional theories on human hearing, particularly in relation to low-frequency sounds like speech and music.

- Traditional understanding posits that each sensory cell in the cochlea has an “optimal frequency” it responds to; however, this new research indicates that many cells react simultaneously to low-frequency sounds, providing a more robust hearing experience.

- This finding is significant for the design of cochlear implants, suggesting that changing stimulation methods at low frequencies could more closely mimic natural hearing, potentially improving the user experience.

- The research provides new insights into the role of outer hair cells in low-frequency hearing, challenging previous beliefs and opening avenues for better understanding and treating hearing loss, especially at low frequencies.

For roughly the past century, scientists have believed that each individual sensory cell has its own “optimal frequency” to which it responds most strongly (frequency being the number of sound waves per second). According to that theory, a sensory cell with an optimal frequency of 1000 Hz would respond much more weakly to sounds with a slightly lower or higher frequency.

Additionally, it’s been largely assumed for decades that all parts of the cochlea work similarly. The new study reports that this is not the case for sensory cells that process low-frequency sound, defined here as frequencies under 1000 Hz. The vowel sounds of human speech fall within this range.

“Our study shows that many cells in the inner ear react simultaneously to low-frequency sound. We believe that this makes it easier to experience low-frequency sounds than would otherwise be the case, since the brain receives information from many sensory cells at the same time,” says Anders Fridberger, professor in the Department of Biomedical and Clinical Sciences at Linköping University, in a statement.

Study authors posit that this construction results in a more robust hearing system. Even if some sensory cells are damaged, plenty of others will remain that can still send nerve impulses to the brain.

Besides vowel sounds during human speech, many sounds associated with music lie in the low-frequency region as well: Middle C on a piano, for example, has a frequency of 262 Hz. Study authors add that this work may one day prove incredibly helpful for people with severe hearing impairments. Right now, the best available treatment for such individuals is a cochlear implant, meaning electrodes are placed into the cochlea.

“The design of current cochlear implants is based on the assumption that each electrode should only give nerve stimulation at certain frequencies, in a way that tries to copy what was believed about the function of our hearing system. We suggest that changing the stimulation method at low frequencies will be more similar to the natural stimulation, and the hearing experience of the user should in this way be improved,” Prof. Fridberger concludes.

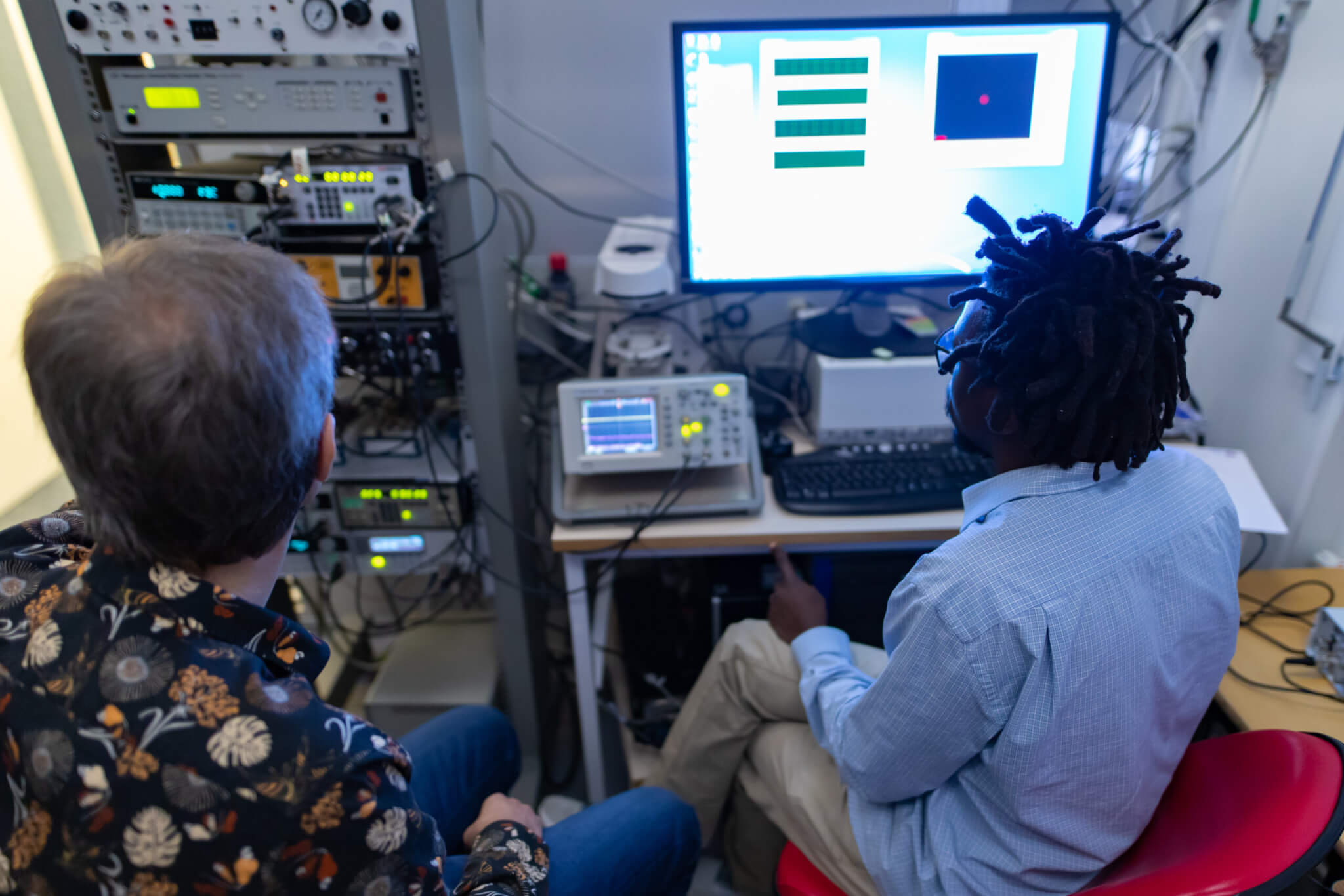

The scientists employed a cutting-edge Thorlabs Telesto III OCT system, a sophisticated imaging tool, to delve deep into the hearing mechanisms of guinea pigs, whose low-frequency hearing closely resembles that of humans. By skillfully directing infrared light into the inner ear using a tiny mirror, they managed to reduce surgical trauma, increasing the accuracy of their findings.

Their discoveries were startling. When exposed to sounds ranging from 80 to 1000 Hz, the inner ear structures of guinea pigs responded in ways that contradicted previous beliefs. The scientists found that at certain frequencies and volumes, the expected responses were flipped – the apical (near the top of the cochlea) parts of the ear reacted more than the basal (lower) parts, contrary to what the standard Greenwood model of hearing predicted.
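For readers curious about what the Greenwood model actually predicts, here is a minimal sketch of the Greenwood frequency-position function, which assigns a single best frequency to each place along the cochlea. The constants below are the values commonly quoted for the human cochlea and are an assumption here; the article does not give the guinea pig parameters used in the study, and those would differ. The function names are illustrative only.

```python
import math

# Commonly quoted Greenwood constants for the HUMAN cochlea (assumed here;
# guinea pig constants differ and are not stated in the article).
A = 165.4  # scaling constant, in Hz
ALPHA = 2.1  # slope, per unit of fractional cochlear length
K = 0.88  # low-frequency correction term


def greenwood_frequency(x: float) -> float:
    """Best frequency in Hz at fractional position x (0 = apex, 1 = base)."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must be a fraction between 0 and 1")
    return A * (10 ** (ALPHA * x) - K)


def greenwood_position(freq_hz: float) -> float:
    """Inverse mapping: fractional distance from the apex for a frequency."""
    return math.log10(freq_hz / A + K) / ALPHA


if __name__ == "__main__":
    # Frequencies mentioned in the article: the 80 Hz lower bound of the
    # test range, Middle C (262 Hz), and the 1000 Hz low-frequency cutoff.
    for freq in (80.0, 262.0, 1000.0):
        print(f"{freq:7.1f} Hz -> {greenwood_position(freq):.3f} of the way from the apex")
```

Under these assumed human constants, everything below 1000 Hz maps to roughly the apical 40 percent of the cochlea, with each frequency tied to one narrow place. The study's finding is that low-frequency responses are spread far more widely than this one-place-per-frequency picture implies.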

The plot thickened when the team introduced furosemide, a diuretic known to affect ear cell function. After administering the drug, they observed a shift in the peak response frequencies in different parts of the ear. Intriguingly, at the apical part, the response paradoxically increased, while the best frequency remained stable.

In a surprising twist, the researchers also conducted postmortem studies, which revealed significant shifts in frequency responses after the death of the animal. This observation provided further evidence of the crucial role outer hair cells play in hearing.

These findings are more than mere scientific curiosities. They suggest that in low-frequency hearing, the motor activity of outer hair cells plays a crucial role in distributing sound-evoked responses over a larger area than previously thought. This challenges the long-standing belief that sound responses are concentrated in smaller regions of the cochlea.

While these studies were conducted on guinea pigs, the implications for human hearing are profound. This research opens new avenues for understanding hearing loss and developing treatments, particularly for low-frequency hearing impairments. Moving forward, study authors plan to examine how their new knowledge can be applied in practice. One planned project will focus on new methods to stimulate the low-frequency parts of the cochlea.

The study is published in Science Advances.

Huh?

Most commonly, I believe, hearing loss first affects consonant clarity, not vowel sounds. Certainly in my case, I have to guess (work out) what the consonants are. Deaf people are slow to respond because they are guessing what it was that you said.

The old joke about a message sent over poor radio reception:

“Going to a dance, send three and fourpence” was actually “Going to advance send reinforcements.”

I and millions of other old people feel his pain.